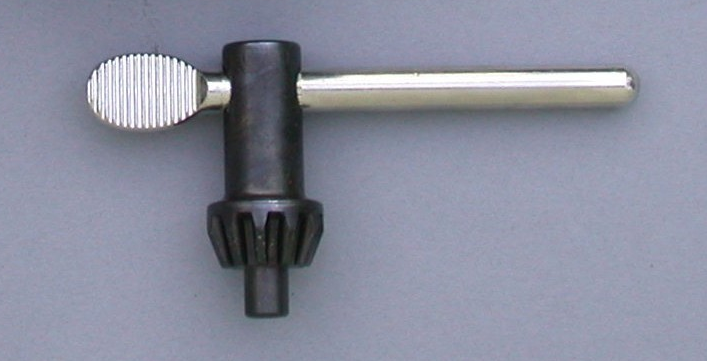

One of the promising undergraduate students in the lab I worked in at Wisconsin was machining a part one day on a mill. He had passed on the unsupervised lab-specific machine shop out of concern for safety and was in the established student shop in the College: a fancy facade of a facility with a carefully organized tool closet and a windowed observation office where the head machinist, a disliked authoritarian of a person with decades of experience, could watch the shop. The student was very sharp, but he left the chuck key in the mill head and turned it on. The key spun around, flew out, and took two of his fingers with it. As he held his bloodied hand, the head of the shop came running out and began yelling at him, “Why did you do that!!” This would surely be a mark on his safety record. The student, in shock, ran to the hallway outside, where other students applied paper towels to his hand and helped him to the hospital.

The problem here was not a lack of authority and control, or severity of consequences, but a lack of community connection and continuous improvement in the shop practices. A chuck key with an ejector spring prevents people from leaving it in the chuck, but is more expensive. The buddy system with a mentor can help spot some of these mistakes, whatever they may be. While these improvements may seem obvious to some, common sense isn’t so common.

The WSU administration, led by the Office of Research, is undergoing an effort to re-emphasize and improve safety at our institution. I was recently informed by my chair that “at least one significant incident occurs at a university laboratory every month.” And OSHA (Occupational Safety & Health Administration) shows “that researchers are 11 times more likely to get hurt in an academic lab than in an industrial lab.” What is it about our authoritarian-legalistic structure of academic bureaucracy that naturally leads to this sub-par performance in such a critical area, and what can we do to improve?

Why Universities have a hard time with safety

I’ve written previously about how Universities evolved tree-like hierarchies. Nearly all of the reward system and feedback loops are geared towards promoting researchers to become power-driven authority leaders in their fields, which reinforces the extant authoritarian-legalistic system structure. The problem with these structures is communication. There is very little duplex communication, i.e. real conversations. There just isn’t time for an administrator to sit down and spend quality time actually working with someone in a lab to mentor them, let alone get to know the people in their division. This results in a natural disconnection and un-grounding of administration from the people actually doing the work. I recently asked one of my friends, who is an administrator: “When was the last time you actually got a training by sitting down and doing the activity with someone, or a group of administrators?” He couldn’t remember a workshop that wasn’t primarily the traditional one-way data dump.

Couple the difficulties in communication with declining resources, increasing performance pressures, and a 2-5 year graduation timer on all your primary lab personnel, and you have a recipe for a safety nightmare.

This means that it’s all too common to hear safety bulletins from administrators along the lines of the following: “make a new resolution to make this year accident free,” or to add “safety to annual performance evaluations,” or to “please report even the minor accidents,” with the emphasis that “failure to report an incident… does result in consequences.” This is the easiest thing for an administrator in a power structure to do. Aside from invasive intrusions into labs, what else can they do? But this leads to other problems.

I once knew an administrator who still conducted research in their lab. One day, a post-doc accidentally mixed two substances in the fume hood, leading to an explosion that destroyed the hood. The administrator, under pressure to reduce accidents in their unit, did not report the incident to others as they were the only required chain of reporting. Months later, a young faculty member in their unit had a similar incident that destroyed another fume hood. A year later, a similar accident sent 16 people to the hospital at a neighboring institution.

When framed like this, the lack of communication almost seems criminal. Clearly, the sad reality is that these authoritative declarations coupled with punishments, within our communication-deficient authoritarian-legalistic system structure, can lead to corruption and actually be detrimental to the broader cause they intend to help. This command and control approach boils down to what is known as the deterrence hypothesis: the introduction of a penalty that leaves everything else unchanged will reduce the occurrence of the behavior subject to the penalty. I’ve previously written about the problems of applying the deterrence hypothesis to grading of coursework. In this case, safety is connected to my performance evaluation, which is primarily used for raise allocations and promotion. In short, if an accident happens, my status and pay within the institution will suffer. So does this feedback mechanism promote better safety or a lack of reporting? The most direct effect is a lack of reporting. This also presumes that the permanent, disabling damage from losing fingers or another accident is not deterrence enough; in other words, the approach assumes that faculty delegate all risks to students rather than doing the activity themselves.

In a famous study titled “A Fine Is a Price,” researchers investigated the efficacy of the deterrence hypothesis at mitigating the undesirable behavior of picking a child up late from daycare. This is low: abusing the personal time of a lower-paid caretaker charged with the health and well-being of your child. In many ways this parallels the minor accidents, cuts, and knuckle bangs we’re being asked to report. In order to couple these to performance evaluations, a non-arbitrary metric must be created to decide how big the penalty, or price, should be. Contrary to expectations, the researchers performing the study found that adding the penalty actually increased the negative behavior that it was intended to deter. The researchers deduced that the penalty became a price (if I’m late, I’ll pay the $20 and everything is ok), regardless of whether the caretaker had other plans. Perhaps the most troubling finding from the study was that once the penalty was systemized, the bad behavior continued even after the penalty was removed. Once you marginalize or put a fee on a person, it’s tough to treat them as a person with rights and dignity again.

I’ve seen this play out many times with daycares, teams, and communities I’ve been involved with. Reliably, the diminishing of people and disruption of personal connection lead to the demise and underperformance of the organization. When an authoritarian is presented with this evidence contrary to their belief, they reliably counter with, “oh I’ll make the penalty severe enough to deter the behavior.” What else can they do? This approach, in the absence of appropriate developmental scaffolding, leads to a depressed environment averse to uncertainty. Everyone becomes afraid to report safety issues, afraid to discuss safety, afraid to try new things and push the limits (isn’t trying new things and pushing the limits called research?), often simply because trying new things is no longer the norm. When something is not the norm, it becomes an uncertainty, a risk, and a threat.

I was once having a discussion with an administrator about a new makerspace on campus. This prompted the statement, “But we’ll never be able to control the safety!” To this I immediately responded: 3D printers are robotic hot glue guns with safety shrouds! Every campus in the US has a gym with a squat rack (people put hundreds of pounds on their backs on a daily basis with poor form), a climbing wall (someone could fall!), a pool (but what if someone drowned!), and a hammer/discus/shot put/javelin toss (yikes!).

Arbitrary targeting of risk/blame is another characteristic of authoritarian/legalistic organizations because they lack established heuristics, a.k.a. processes, to work through safety scaffolding of new activities. Shot put and the hammer toss are established activities that our culture has normed to, where the risk of developing the established safety protocol was borne centuries ago, so there is less of a need for an administrator to CYA. Moreover, a command and control approach isn’t what makes them safe; connections and discussions with people are. The disincentive for using the squat rack incorrectly is chronic back pain, something I deal with on a daily basis. That risk didn’t stop me from squatting incorrectly! The problem was ineffective coaching/scaffolding. Telling the coaches to coach better won’t explicitly fix that. And we can’t always rely on starting a new facility fresh with appropriate safety from the beginning.

I once attended a safety seminar led by a well-respected researcher at another academic institution. The researcher described the brand new building they were having built, and all of the safety protocols they implemented to make it safe. Afterward I asked the researcher about their approach for improving safety within established student clubs. The response stunned me: “I’m not really sure. We have another building for that. We never allow students to work after hours unsupervised.”

They had almost entirely avoided teaching intrinsic safety culture! The students were never allowed autonomy to make decisions! I told myself I’ll never bring in a student from that institution. This exemplifies what happens when we are granted huge resources without having to perform or evolve to a level that justifies them, as in industry. It was almost Orwellian. Certainly not the future our society and university need.

After having a string of safety incidents in their unit, an administrator and safety board required every club and lab to have a “designated safety officer” or a designated authority to control safety for the group. After a few months in this position, one lab’s “safety officer” lamented to me, “Sometimes I need to be the bad guy because people don’t take safety seriously. But it gets tiring. They dislike me for it, blame me when stuff goes wrong, and they still don’t take safety upon themselves.”

This is directly analogous to the problem of quality control faced in Lean Manufacturing. In Lean, the question comes up of whether something you’ve manufactured meets the design specification. Do you hire a quality control czar to stop production if product starts coming out not to spec or unsafe? Ever heard a story of someone who was frustrated with the quality cop coming over to tell them things were wrong yet providing no explanation of what was wrong or how to fix it? Moreover, the only way to ensure 100% quality/safety is 100% inspection, which is not a sustainable or scalable approach. The Lean approach is to design quality/safety control into the production process: if the part can’t be made wrong/unsafe, it’s much easier to achieve 100% safety/quality. Moreover, if everyone is responsible for checking safety/quality during the production process, you have just made everyone in your group a safety officer and multiplied the odds of spotting a risk before it’s realized.
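To put a rough number on “multiplied the odds,” here is a minimal sketch. It assumes, purely for illustration, that each person independently has the same chance of spotting a given hazard; real labs will not be this tidy. The function name `detection_probability` and the 30% figure are hypothetical, not measurements from any shop or lab.

```python
def detection_probability(p_single: float, n_checkers: int) -> float:
    """Chance that at least one of n checkers spots a hazard.

    Assumes every checker has the same probability p_single of noticing
    the hazard and that checkers act independently (a simplification).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_checkers < 0:
        raise ValueError("n_checkers must be non-negative")
    # The hazard is missed only if every single checker misses it.
    return 1.0 - (1.0 - p_single) ** n_checkers


if __name__ == "__main__":
    # Hypothetical: each person notices a given hazard 30% of the time.
    for n in (1, 2, 5, 10):
        print(f"{n:2d} checker(s): {detection_probability(0.3, n):.2f}")
```

Under these assumptions a lone safety officer catches far less than a group in which everyone is looking, which is the point of spreading the responsibility around.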

Another common characteristic of authoritarian-legalistic approaches to safety is the posting of negative signage/reminders. “No ___ allowed.” “Don’t do this!” etc. Here’s a great counter example from Seth Godin titled, “How to make a sign.” The problem is we become numb to these negative associations and quit paying attention. That’s why we have “Did you know?” documents in our lab that just describe the right process for doing something. We try to include a funny meme at the top to get people to positively associate and look at these. Here’s an example posted near a compressed gas bottle area:

It boggles my mind why lab leads do not have safety procedures posted by all key lab processes and equipment. It’s really simple — if something goes wrong you change the procedure. Changing the procedure is orders of magnitude cheaper and easier than changing the equipment or personnel. It’s therefore much easier to continuously improve procedure.

So we’ve shown in multiple ways the safety shortcomings of traditional authoritarian-legalistic bureaucratic structures. How do we get beyond these to cultivate a sustaining community and culture of safety within such institutions?

Let’s talk about Scaffolding Layers of Safety

In short, the real solution to safety is performance-based funds from a diverse array of sources, like in industry. This naturally dovetails with a diverse, sustaining and supporting lab community. If you’re operating efficiently and effectively, you can’t stand the loss of a well-trained person, even for a few days. But that’s a chicken-and-egg conundrum for us in universities. I’ve written previously about the challenges and tips for building sustaining lab communities. It’s not easy! In short, you have to scaffold multiple orthogonal value sets. But the end result can literally be a life-saver!

About 6 months ago we had a near-miss hydrogen venting event in the lab caused by a power failure and a pressure relief valve freezing shut. Because we had multiple layers of safety engineered into the experiment, and multiple layers of communication within the lab and the university, a potential tragedy was avoided. In the end, instead of being reprimanded, we got a 5-month extension on the project, upgrades to the lab, and were told by administrators, “This was not an accident because you are working hard to do everything right.”

In a recent post I provided a scaffold to grow agency in engineering education. The key premise is that values change, and we need a scaffold that relates to many different value sets. Safety is no different. This provides the “layered” approach to safety that is popular in software security and other forward-thinking fields. Here are several levels and examples of what we do in the HYPER lab to help activate the appropriate values:

Authority: Typical of most research labs. A grad student, or preferably a team of 2 grad students and 2 undergrads, is responsible for maintaining an experimental or fabrication facility. Their names and pictures are associated with the project both in person and virtually through the lab website. They are also given an instant communication channel, specific to the experiment/facility, that the lab can see. Notice I was careful to connect authority to responsibility and have carefully steered clear of the power-command authority traditional in academia.

Legalistic: Each experiment has a Safety Protocols and Procedures manual that is continually refined (send me a note if you want to see ours; I don’t want to display it online in case of nefarious actions). The safety manual includes a Failure Modes and Effects Analysis (FMEA) that predicts all of the likely safety issues and emergency protocols. We implement the buddy system for changes to experiments and manuals: you need to have someone else there to approve. We are also continuing to develop a common lab rules, standards, and values banner that goes above the doors to spaces. We are working to develop standard trainings for the right and wrong ways to utilize plumbing fittings and seals common in our work. We emphasize use of engineering standards wherever applicable.
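For readers who have not seen an FMEA, here is a minimal sketch of the conventional scoring idea: each failure mode is rated from 1 to 10 for severity, occurrence, and difficulty of detection, and the product (the Risk Priority Number, or RPN) is used to rank what to fix first. This is not our manual. The class and function names are hypothetical, the failure modes are loosely borrowed from incidents mentioned in this post, and every score is made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (always caught) to 10 (almost never caught)

    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        for score in (self.severity, self.occurrence, self.detection):
            if not 1 <= score <= 10:
                raise ValueError("FMEA scores must be between 1 and 10")
        return self.severity * self.occurrence * self.detection


def rank_by_risk(modes):
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn(), reverse=True)


# Hypothetical entries with made-up scores, for illustration only.
EXAMPLE_MODES = [
    FailureMode("Pressure relief valve freezes shut", 9, 3, 6),
    FailureMode("Power failure during a run", 6, 4, 2),
    FailureMode("Leaking plumbing fitting", 5, 5, 4),
]

if __name__ == "__main__":
    for mode in rank_by_risk(EXAMPLE_MODES):
        print(f"RPN {mode.rpn():3d}  {mode.description}")
```

The ranking is only a conversation starter. The scores are judgment calls, which is one more reason to make them with a buddy rather than alone.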

Performance: Once a student is proficient with the responsibilities, literature, trainings, and practices in an area, they develop a did-you-know? heuristic process document. This informs people of the necessary steps unique to the space for accomplishing a task. Students at this level are expected to begin bringing in their own resources and recruiting their own students to work on their project. We are also implementing a traveling safety award for the lab and tracking days without incidents.

Community: All of the lab members (without me) go to lunch together once a week. In addition, we work together as a lab for a 3-hour time block once a week on lab community builds and needs, including safety. This is greatly enabled by allowing all of the students to contribute to our community website (this site) and our Slack message board. We offer tours of our lab as frequently as possible to gain critical feedback and advice from potential stakeholders or partner labs. I’ve written previously about Tradings Places and Ways.

Systemic: We’ve established the expectation of all lab members to contribute and cultivate our system and community by looking for and enhancing restoring feedback loops that improve our efficiency in each of these levels. We do this by building our people from the ground up — we seldom import talent into our culture. This is very similar to Toyota and other lean production environments. No surprise, our lab has the Lean Philosophy of 5-S posted throughout: Sort, Sweep, Systemize, Standardize, Sustain.

So far things seem to be working. We have equipment and builds that I’m sure my colleagues think are ludicrously difficult and risky. We’re the only lab in the country that focuses on cryogenic hydrogen, which has the highest thermal, fluid and chemical power gradients. Hydrogen should not be taken lightly! But I also know that the students are developing in incredible ways and coming together as a community to make it happen, safely. One of the reasons I know this is the fact that they’re not afraid to talk about safety, and they are having fun with it!

So let’s talk about safety! Send me your comments and suggestions: jacob.leachman<at>wsu.edu